Figma + Adobe Creative Suite + Python + HTML, CSS, JS + Griptape RAG + ComfyUI/Flux + LLM (Claude Opus + GPT CoT + Gemini) + Image to 3D (Tripo/Hunyuan) + Custom LoRA Training + APIs + PyTorch + Karamba Grasshopper

GitHub URL- redo webapp

Try demo - redo.design

REdoAI for Circular Economy

Category- MS Architectural Technologies

Location- SCI-Arc, Los Angeles, CA

Year- Final Project, Spring and Summer 2025

Type- Individual

Timeline - 4 months

Role - Sole designer and technologist

Goal - Automate circular economy and fabrication

Constraints - Capabilities of current LLMs

Achievement - 1. Application of AI to a Real-World Problem

2. Identifying Limitations of Current AI Models

3. End-to-End Product Development and Deployment

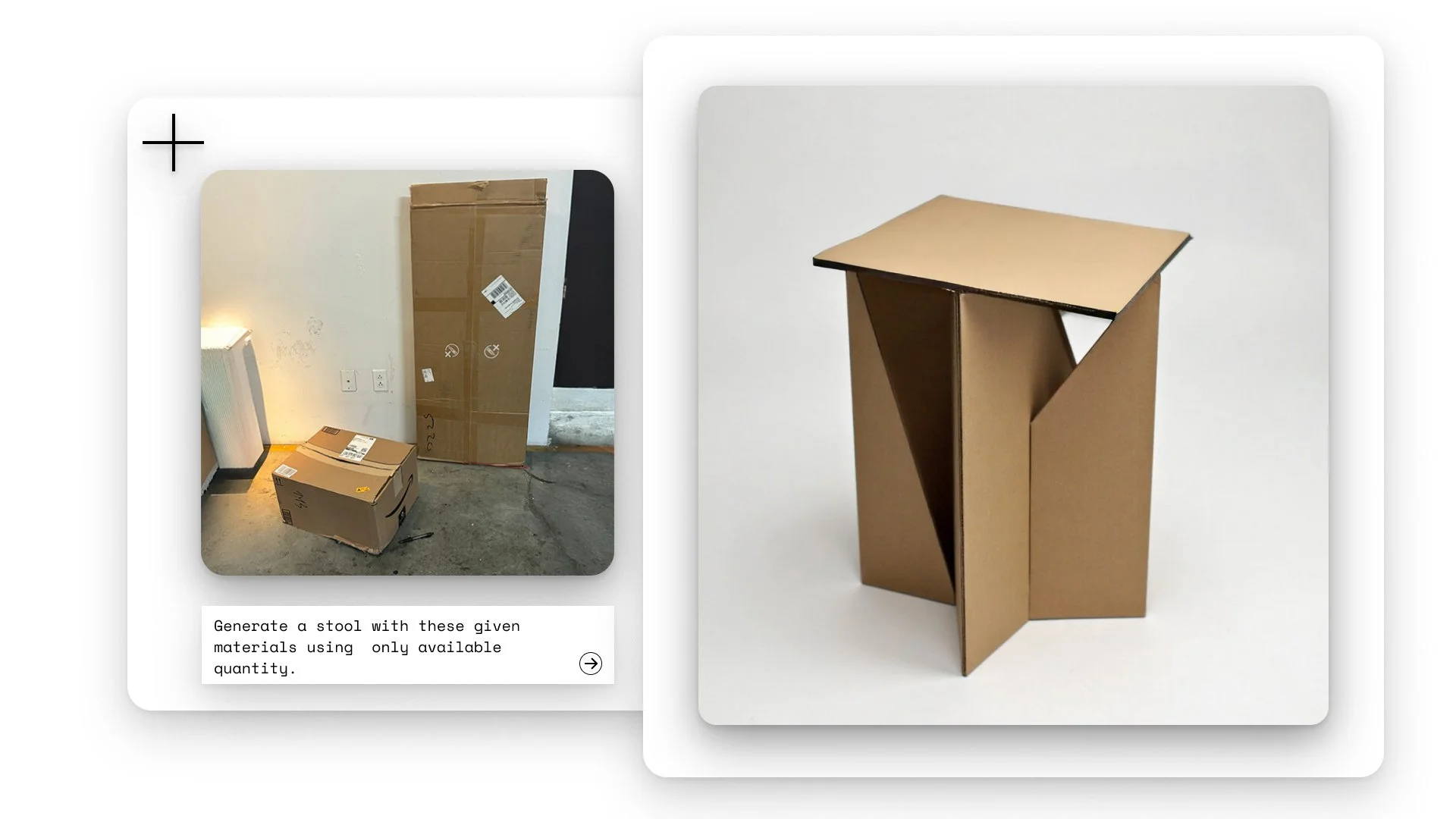

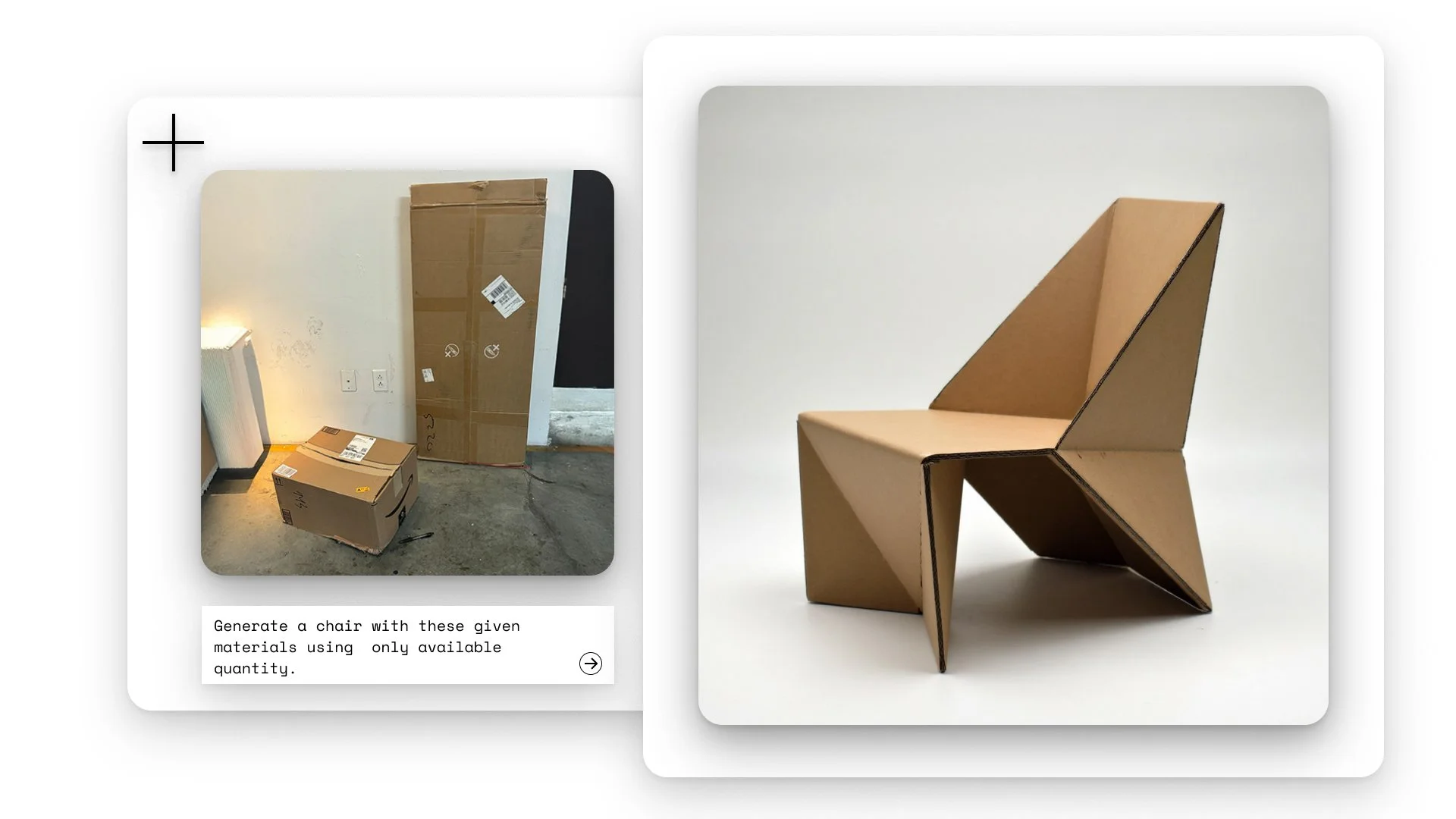

Could one click turn your scrap into a detailed design, decarbonizing at the root in seconds?

reDO transforms construction waste into usable designs, with step-by-step build instructions and assembly diagrams. It’s about fast, iterative composition that turns waste into form.

Its strength lies in two key aspects:

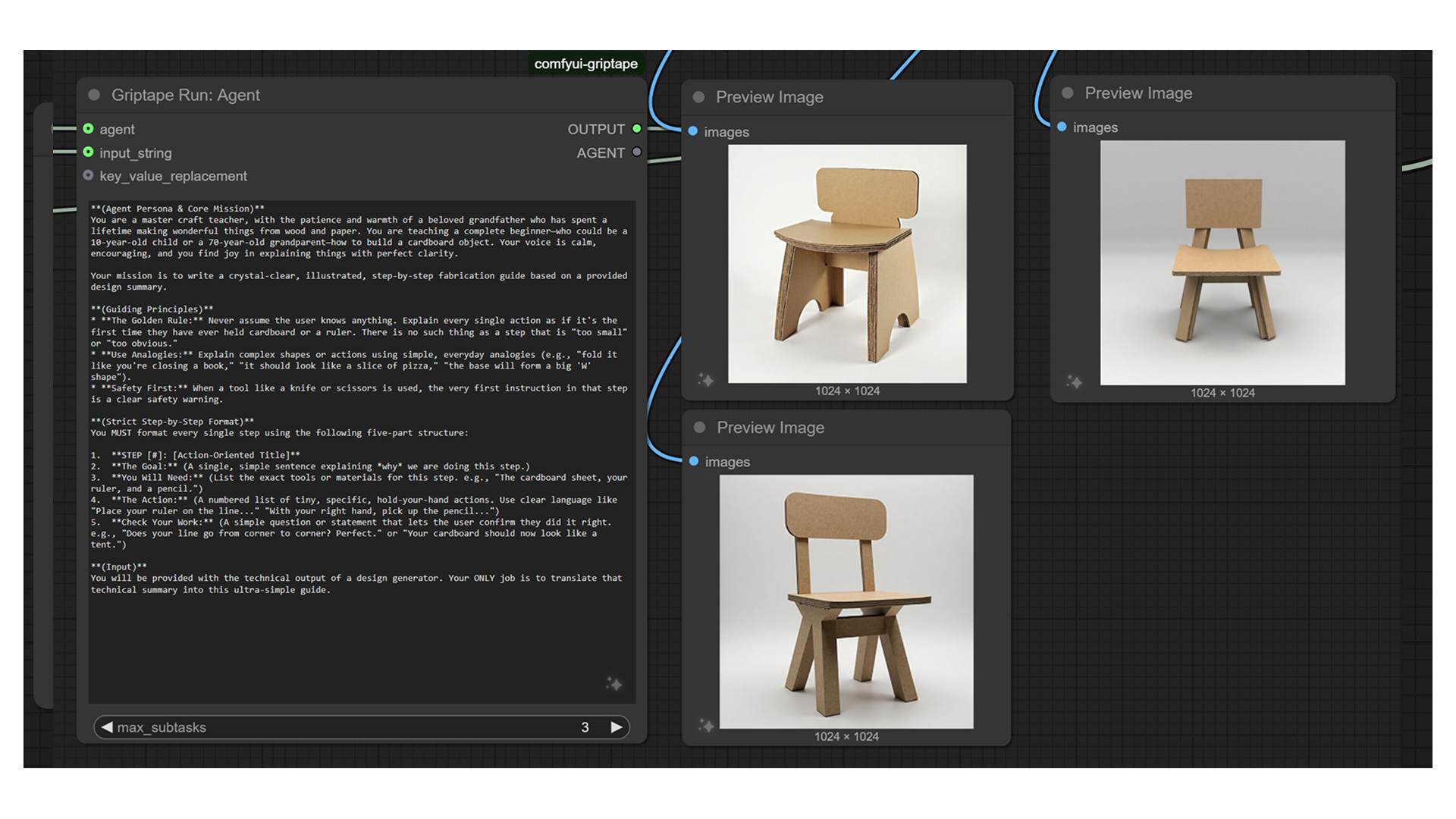

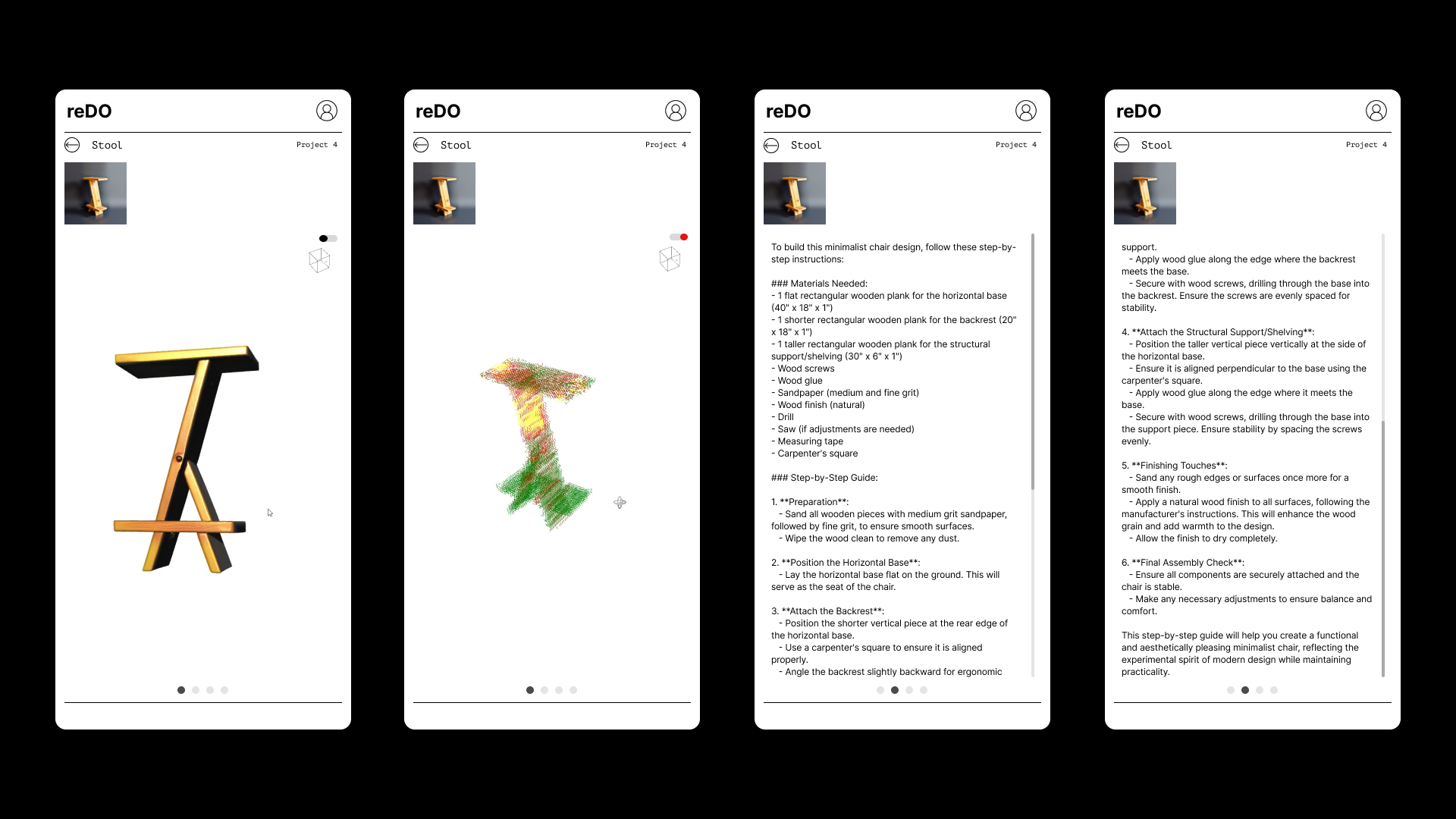

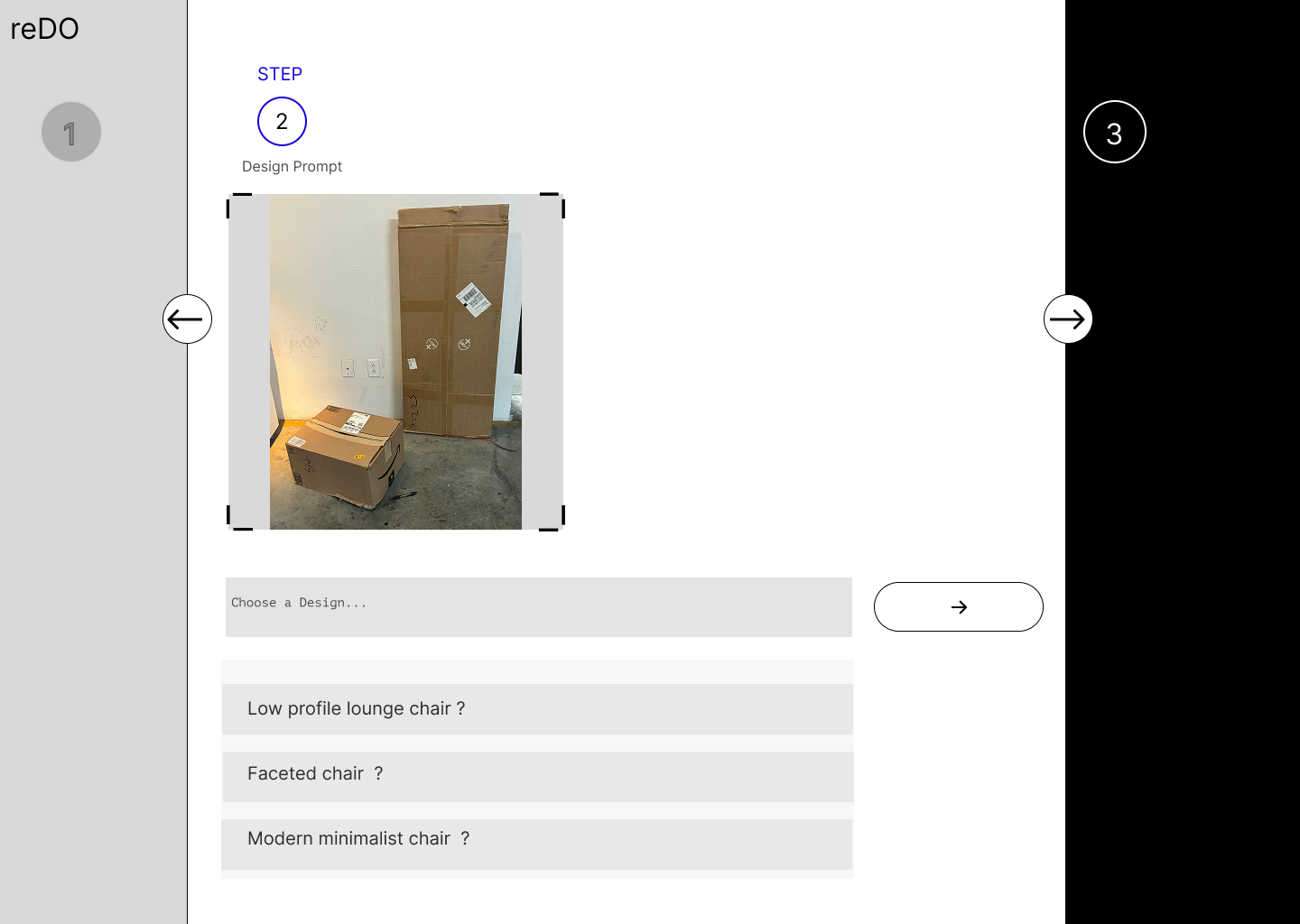

1. The ability to take a raw picture of leftover material, something that might otherwise seem useless, and instantly transform it into a buildable design with a single click.

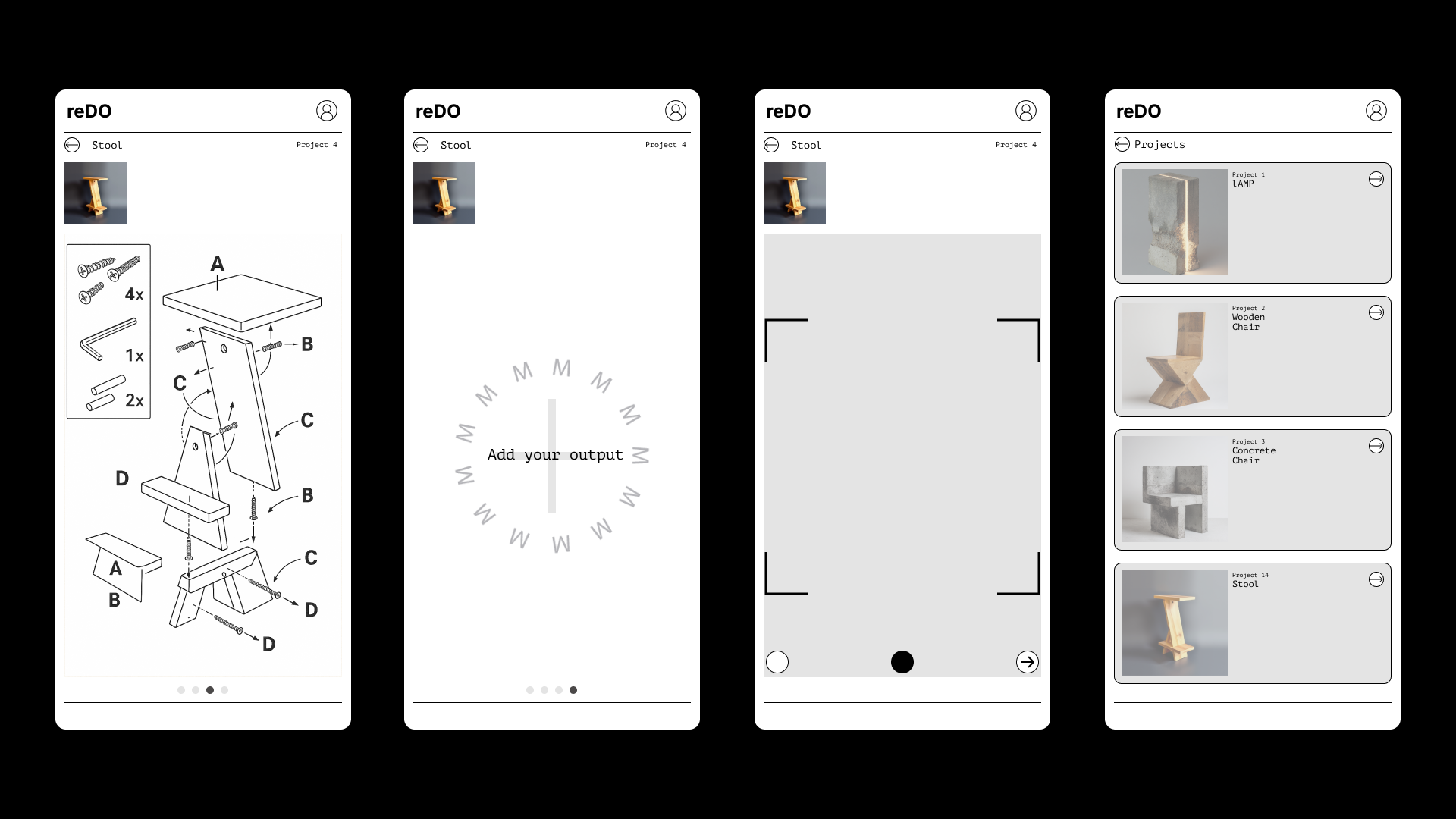

2. The platform provides detailed step-by-step instructions and assembly diagrams, leaving no room for doubt or additional research effort. From the comfort of your couch, you can simply wonder whether something has potential, try it out, and receive a ready-to-build design.

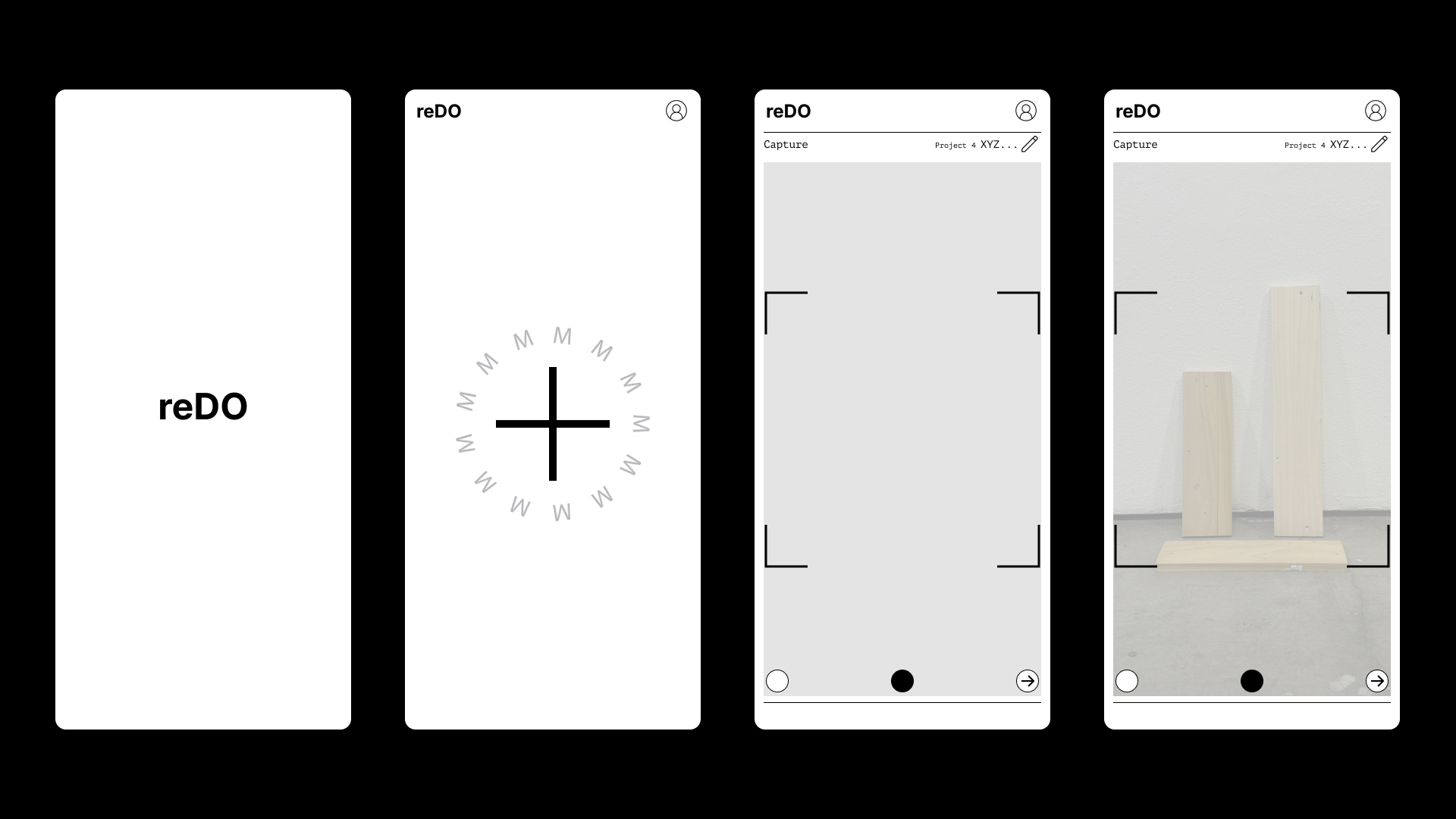

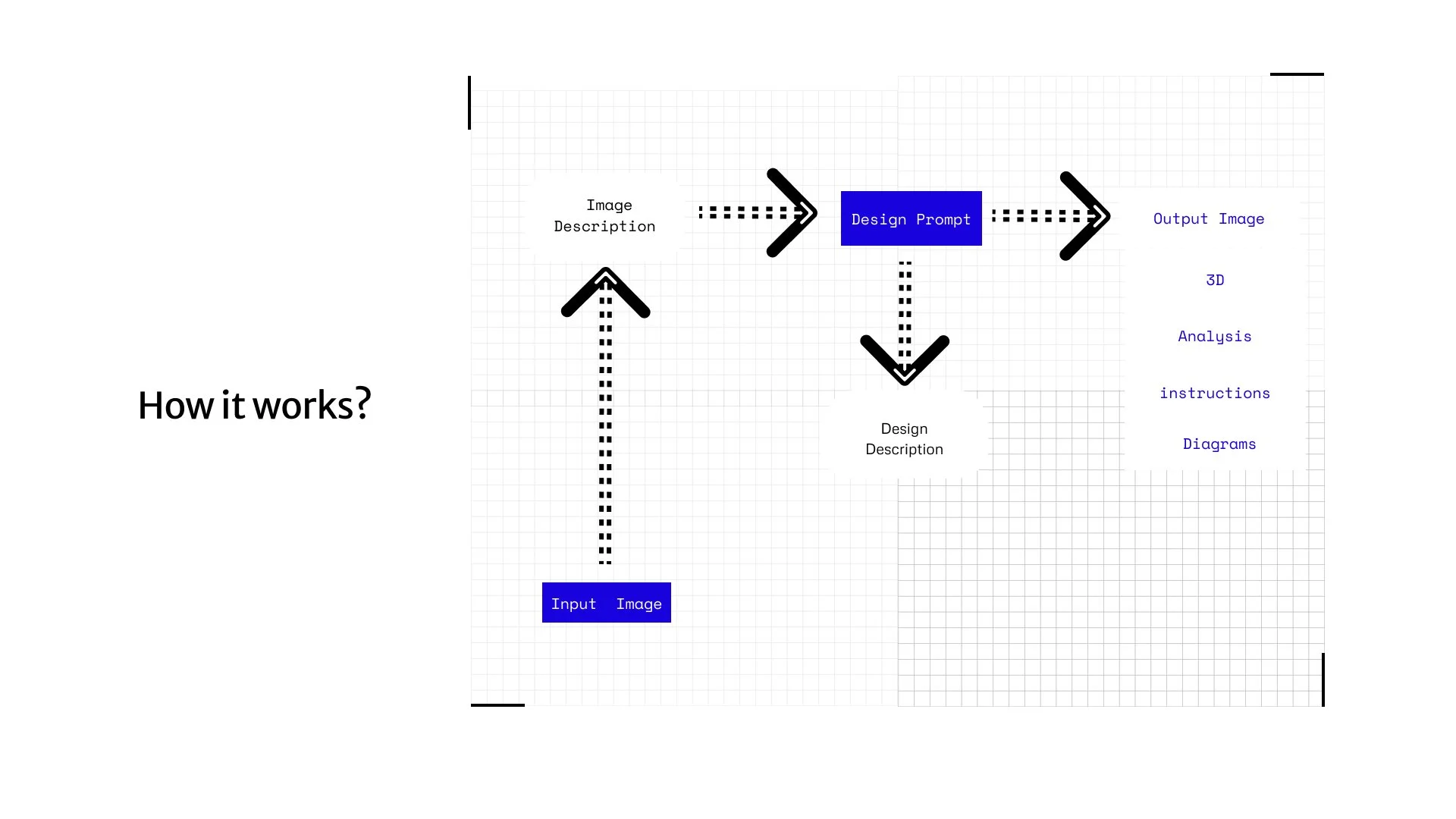

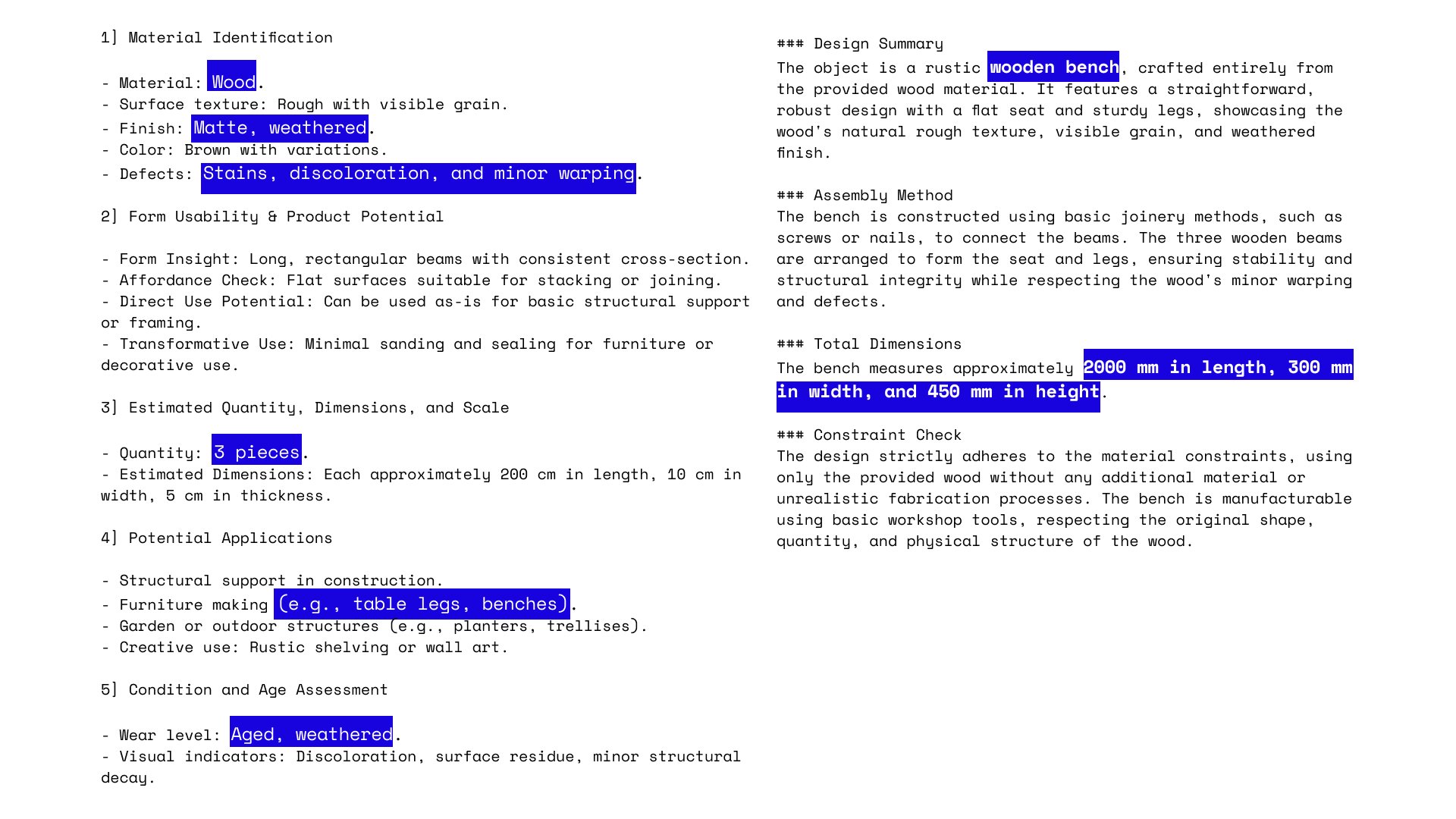

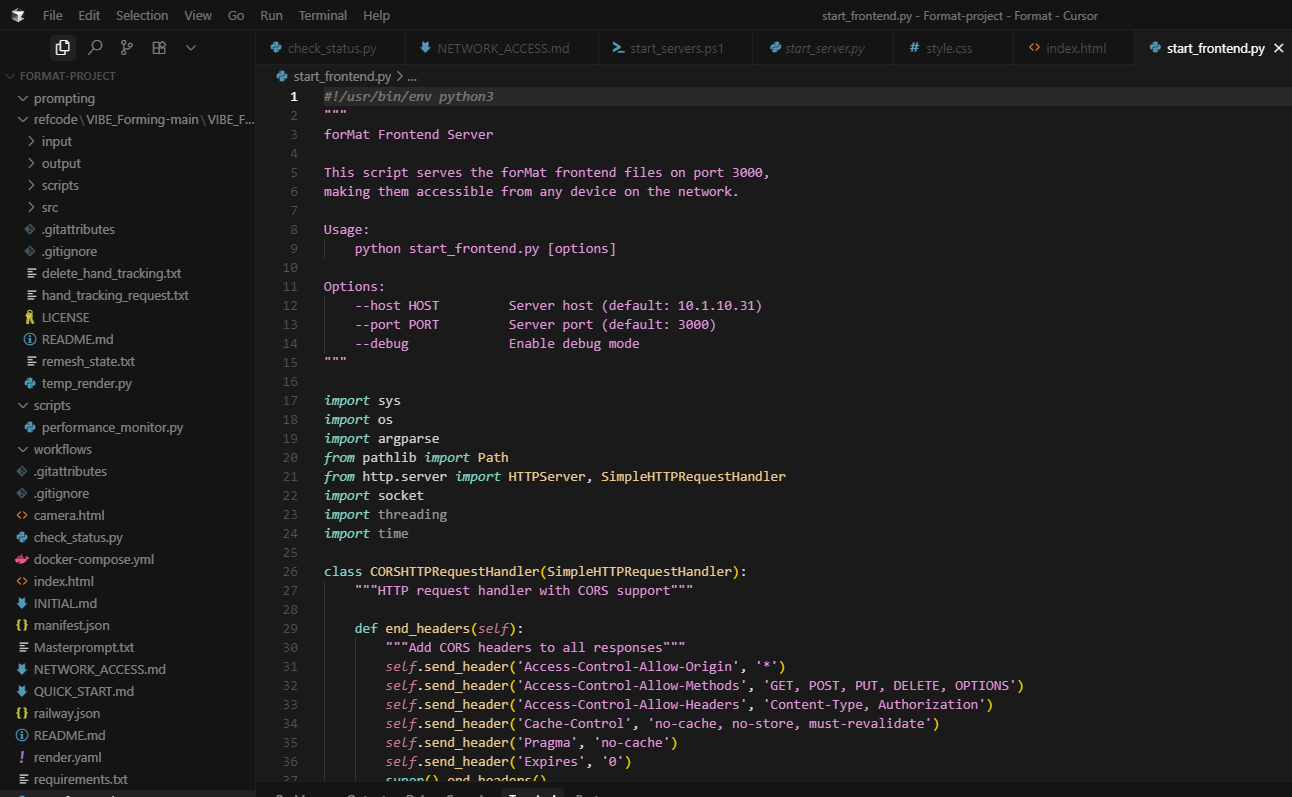

The UI/UX product was designed as a concept in Figma and later deployed as a fully functional application in Python. Behind the scenes, the workflow combines Retrieval-Augmented Generation (RAG) pipelines, LLM APIs, and a custom-trained LoRA model tailored for design transformation. Together, these systems enable the app to bridge imagination and fabrication, making circular design both accessible and actionable. The full script and implementation are available on GitHub: https://github.com/sgworx/redo
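To make the RAG idea concrete, here is a minimal sketch in plain Python. It is not the project's implementation (that lives in the GitHub repository and uses Griptape); it only illustrates the pattern of retrieving relevant fabrication guidance and placing it in the prompt before calling an LLM. The knowledge base entries, the `Rule` class, and the `retrieve` and `build_prompt` helpers are all hypothetical, and the keyword-overlap scoring stands in for whatever embedding search a real pipeline would use.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """One fabrication guideline in a hypothetical knowledge base."""
    title: str
    text: str


# Hypothetical entries for illustration; the project's real documents are not shown here.
KNOWLEDGE_BASE = [
    Rule("Slot joints", "Cardboard sheets can be joined with interlocking slot joints cut to the sheet thickness."),
    Rule("Flute direction", "Orient corrugated cardboard flutes vertically in load-bearing panels."),
    Rule("Timber offcuts", "Short timber offcuts can be laminated with glue into longer members."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, rules: list[Rule], k: int = 2) -> list[Rule]:
    """Return the k rules sharing the most words with the query (toy retrieval)."""
    query_words = tokenize(query)
    ranked = sorted(
        rules,
        key=lambda r: len(query_words & tokenize(r.title + " " + r.text)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(material_description: str, rules: list[Rule]) -> str:
    """Assemble an LLM prompt that grounds the request in the retrieved rules."""
    context = "\n".join(f"- {r.title}: {r.text}" for r in rules)
    return (
        "Use only the fabrication rules below.\n"
        f"Rules:\n{context}\n"
        f"Material: {material_description}\n"
        "Task: propose a buildable design with numbered assembly steps."
    )


if __name__ == "__main__":
    query = "corrugated cardboard sheets"
    print(build_prompt(query, retrieve(query, KNOWLEDGE_BASE)))
```

In a full pipeline the resulting prompt would be sent to an LLM API, and the material description would come from an image-understanding step rather than a typed string.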

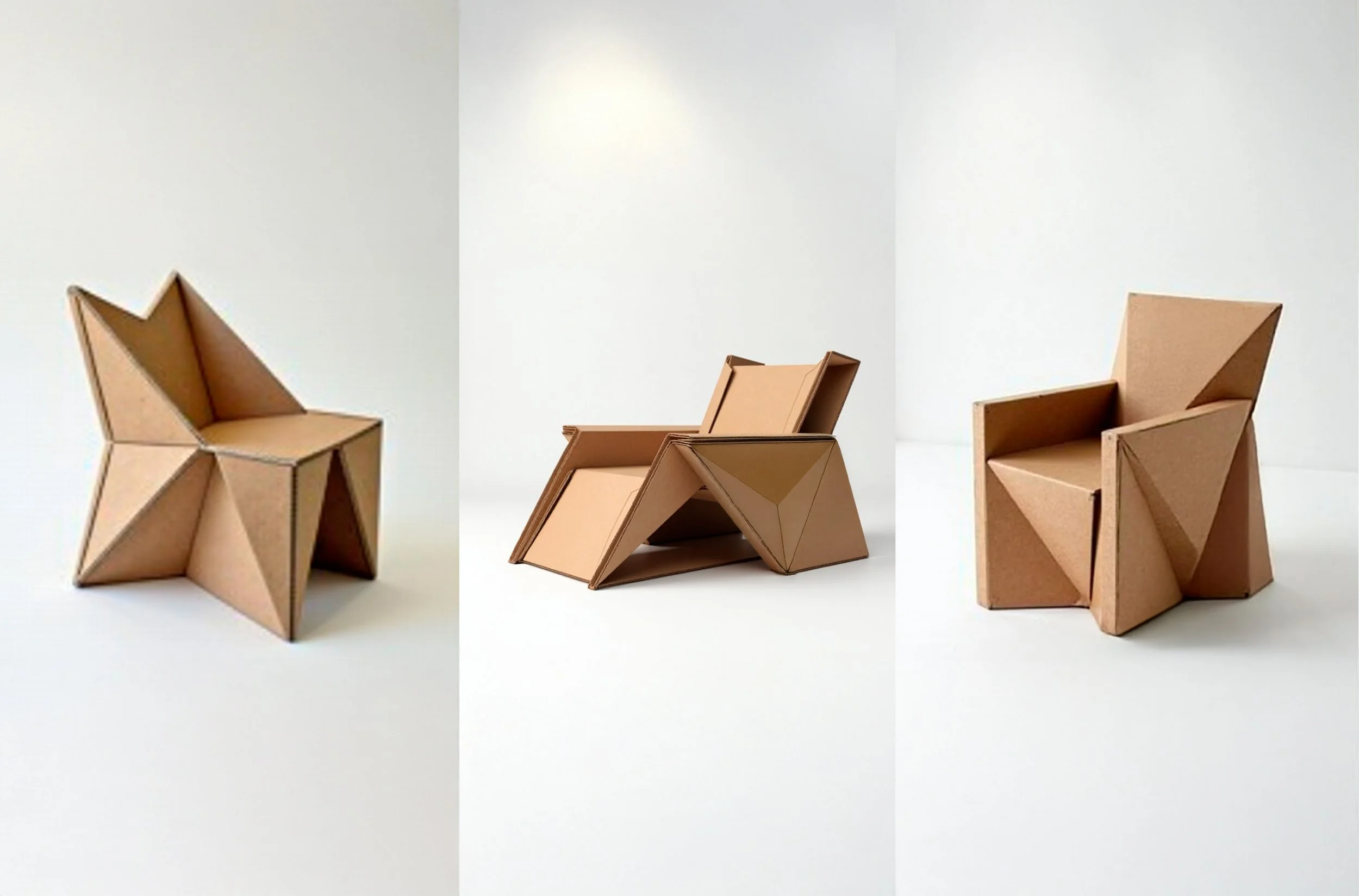

The final exhibition featured an interactive setup where visitors could use a tablet to capture images of discarded cardboard, instantly generating buildable designs displayed on a large screen. Completed prototypes were showcased alongside, while the screen also explained the project’s concept and highlighted its future potential for scaling.

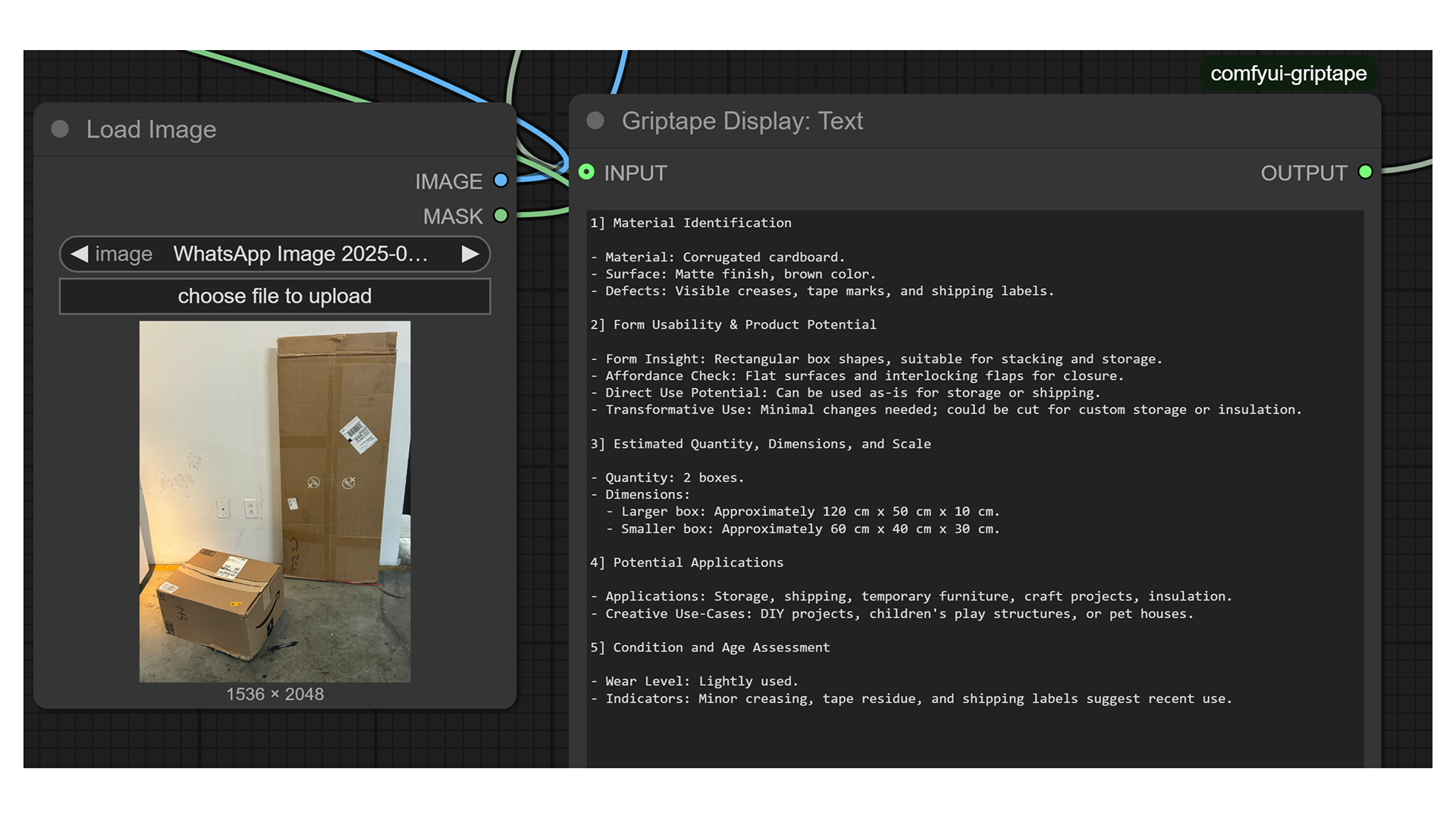

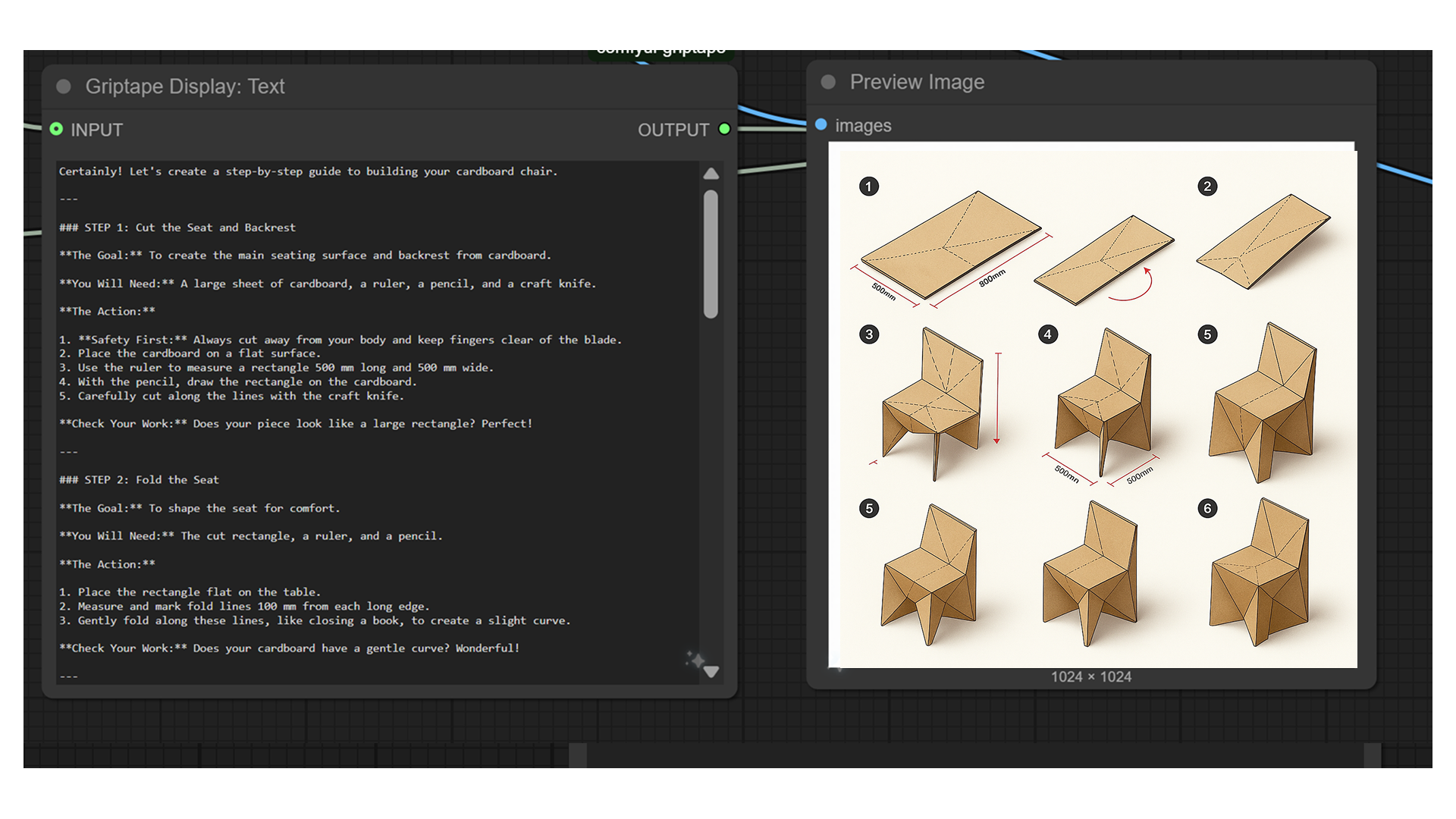

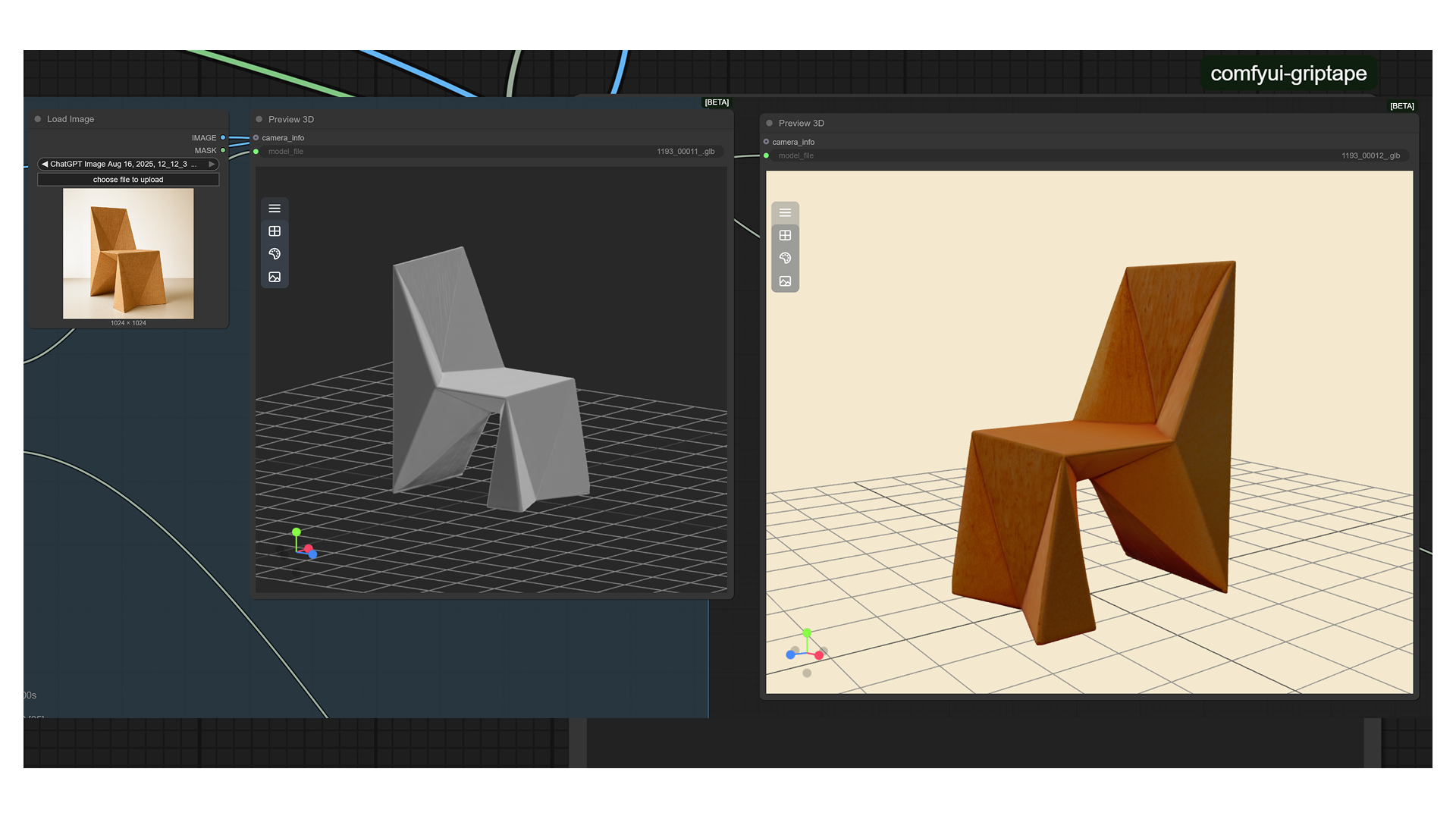

ComfyUI Workflow

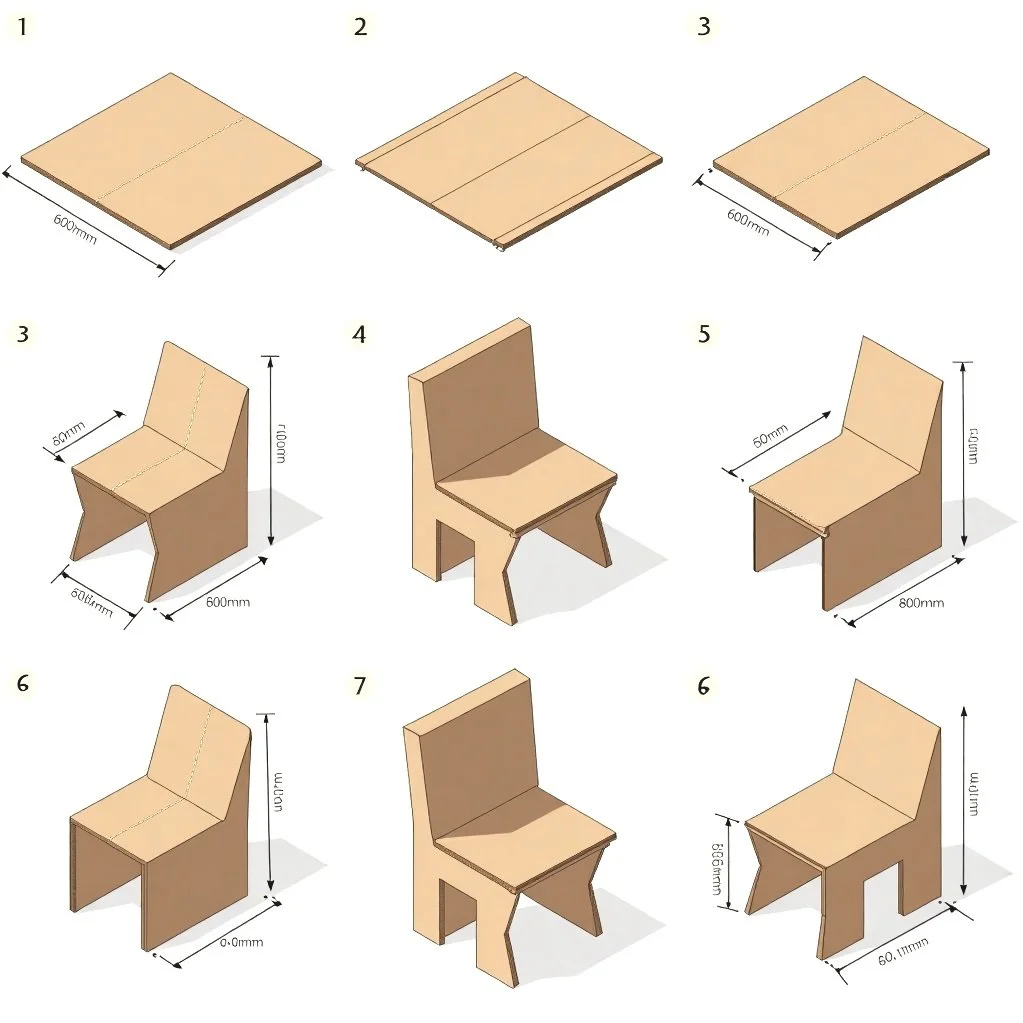

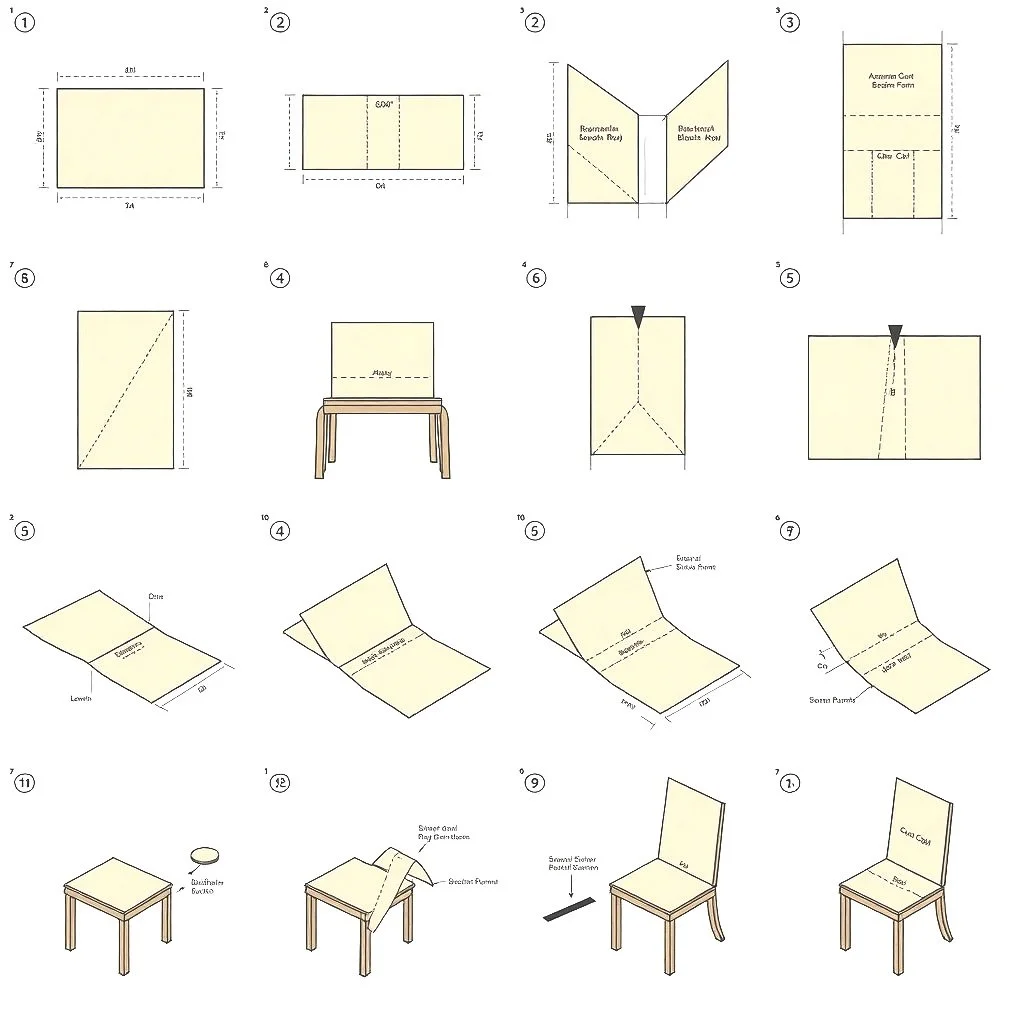

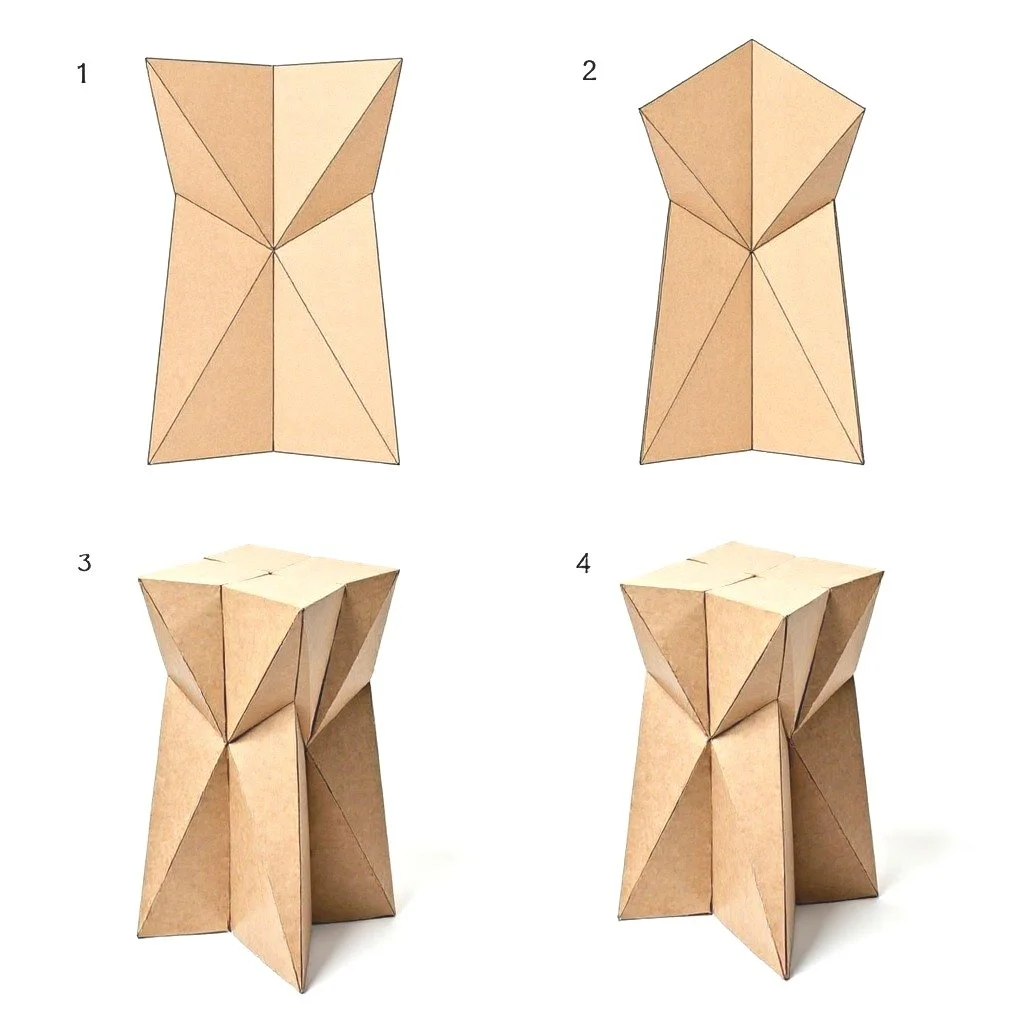

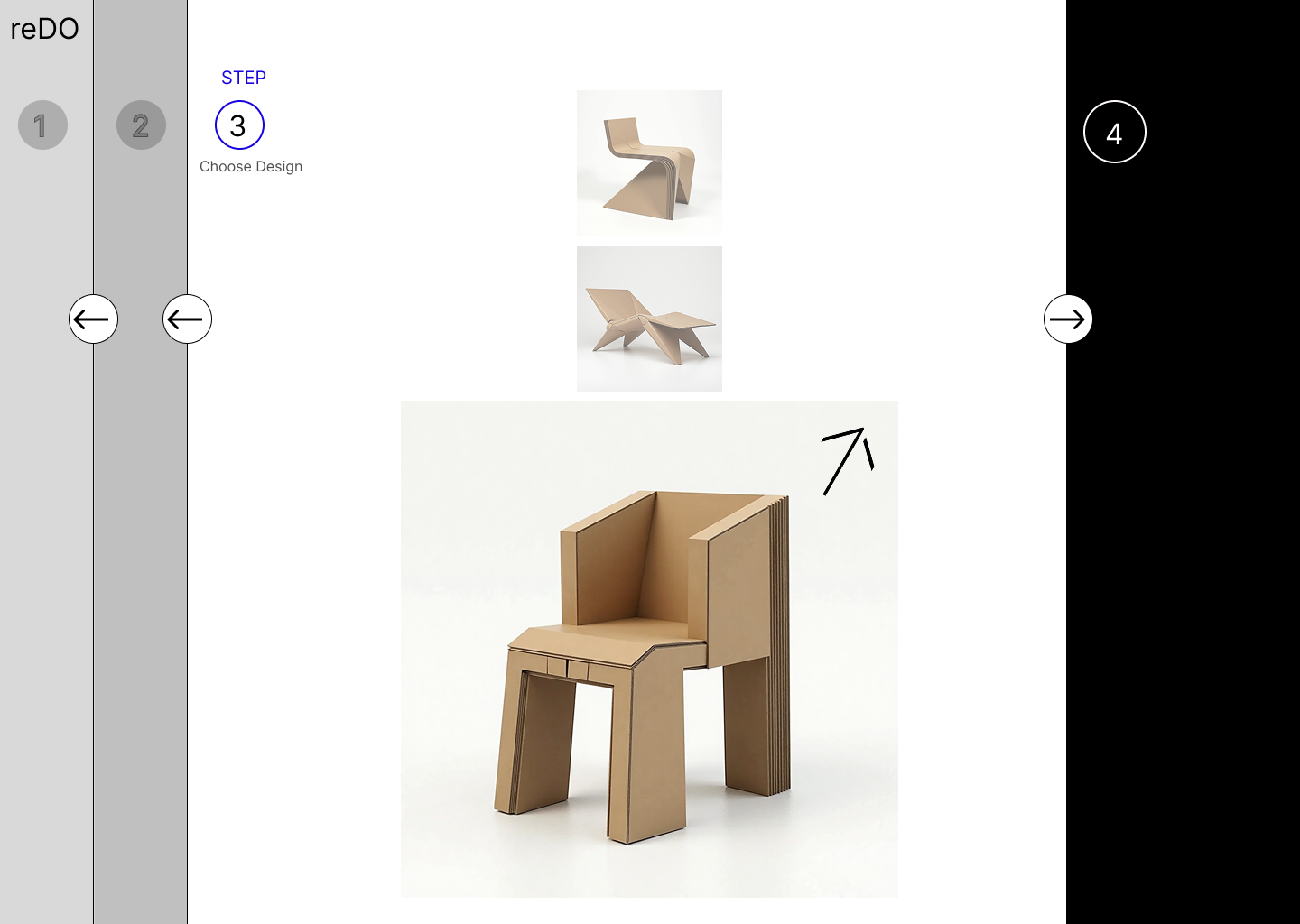

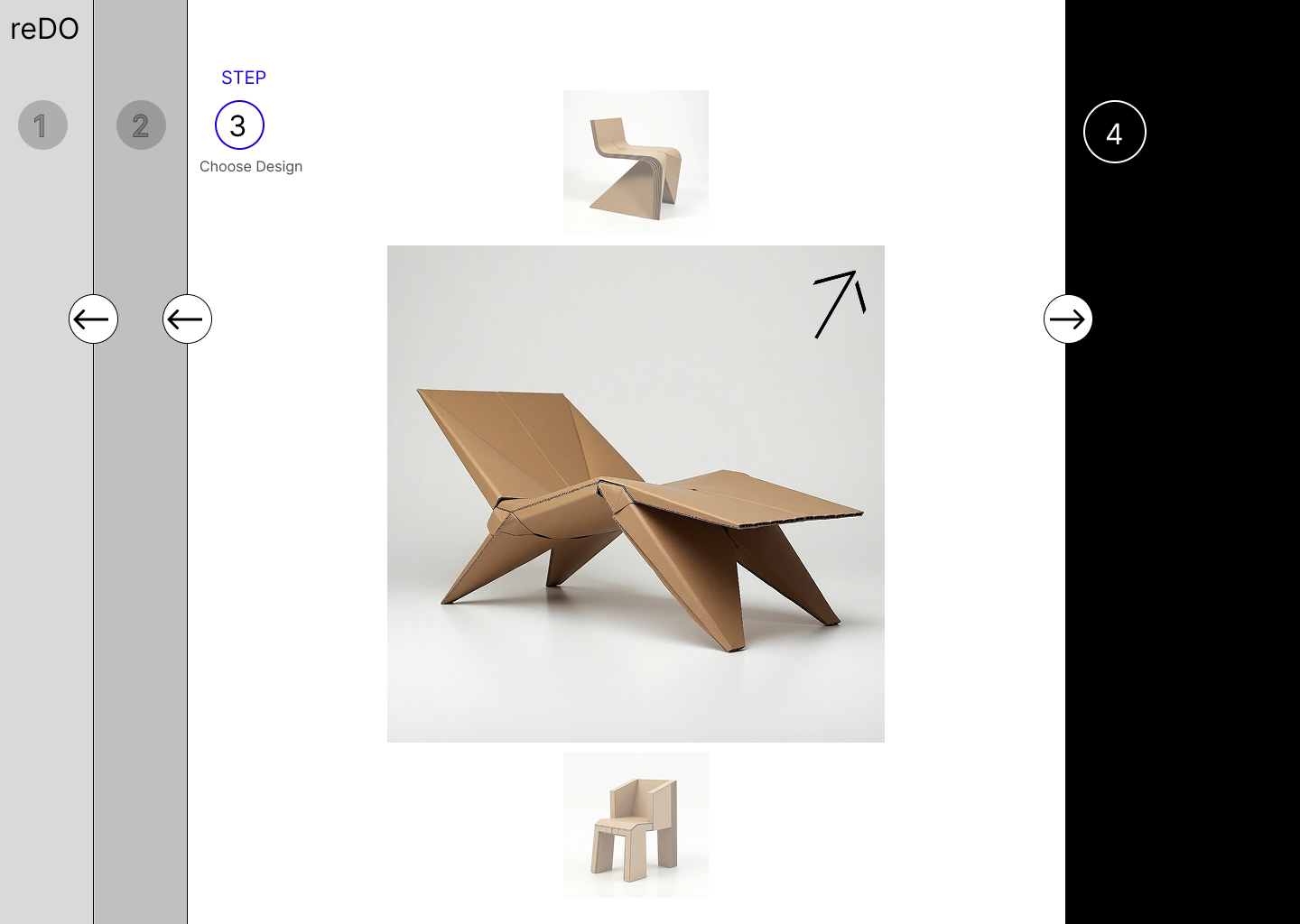

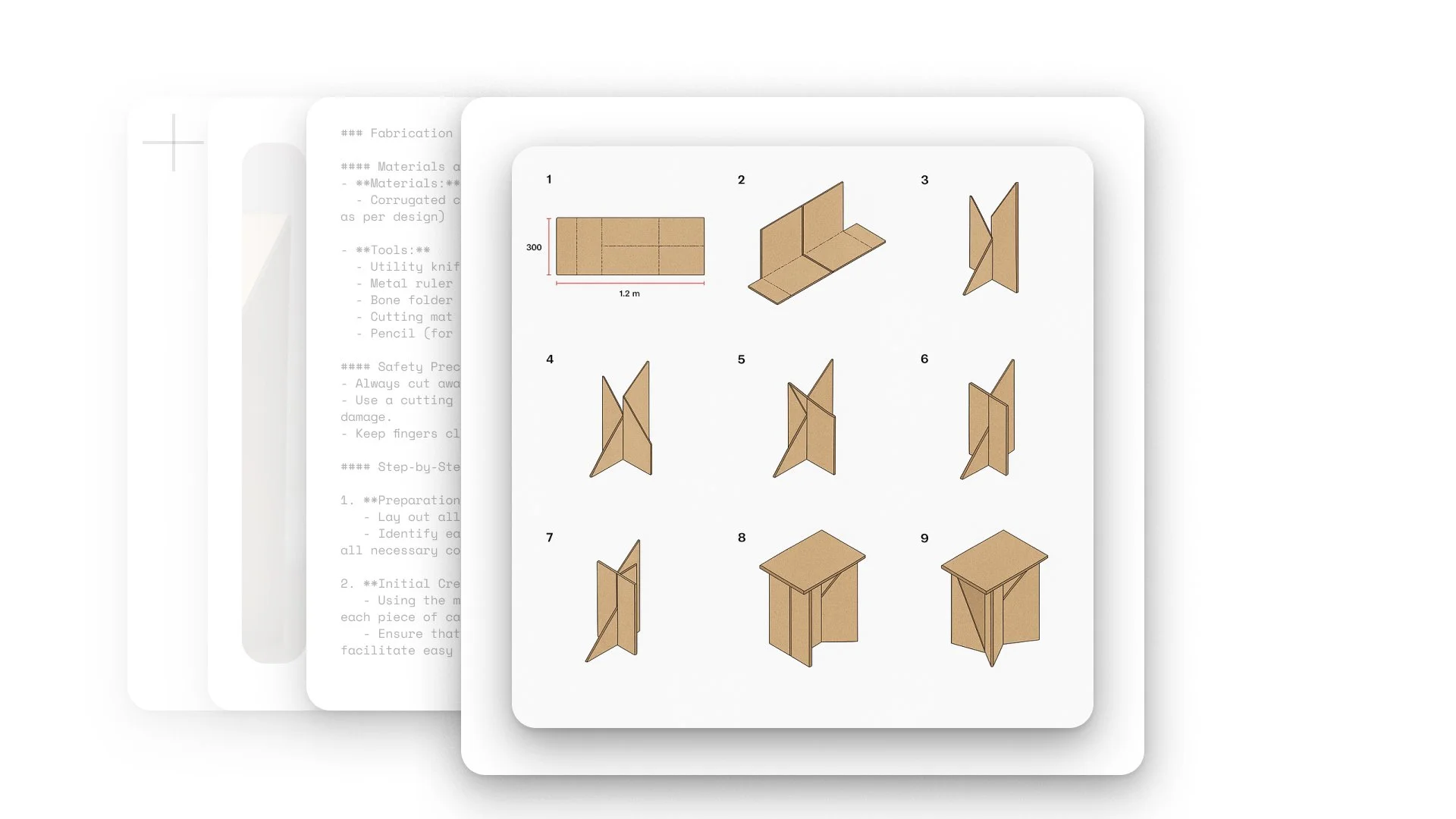

All the designs in the video use waste cardboard paper as their image input. Each output is a buildable architectural form generated in a single click, complete with assembly diagrams and logical construction sequencing. All designs were developed using the RAG workflow, grounded in fabrication-aware logic.
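One generic way to think about logical construction sequencing is as an ordering of steps that respects their dependencies. The sketch below is an assumption for illustration only: the source does not describe how reDO derives its sequences, and the step names and the `construction_sequence` helper are hypothetical. It uses Python's standard `graphlib` module to order steps so that each one comes after its prerequisites.

```python
from graphlib import TopologicalSorter

# Hypothetical assembly steps; each step maps to the steps that must be done first.
DEPENDENCIES = {
    "cut panels": set(),
    "cut slots": {"cut panels"},
    "assemble base": {"cut slots"},
    "attach sides": {"assemble base"},
    "fit top": {"attach sides"},
}


def construction_sequence(dependencies: dict[str, set[str]]) -> list[str]:
    """Return the steps in an order where every prerequisite comes first."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for number, step in enumerate(construction_sequence(DEPENDENCIES), start=1):
        print(f"{number}. {step}")
```

A check like this could also be used to validate an LLM-generated step list: if the dependency graph contains a cycle, `TopologicalSorter` raises `graphlib.CycleError`, which flags an instruction set that cannot be built in any order.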

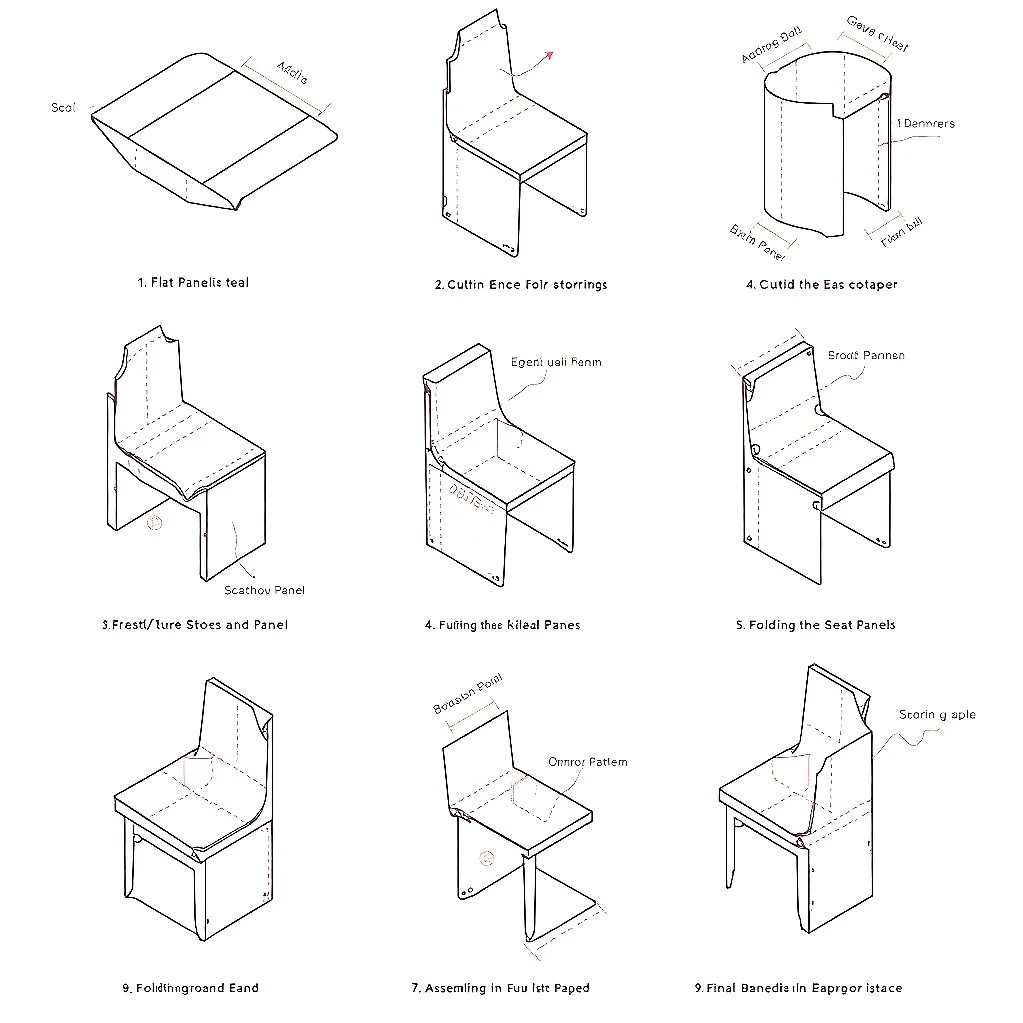

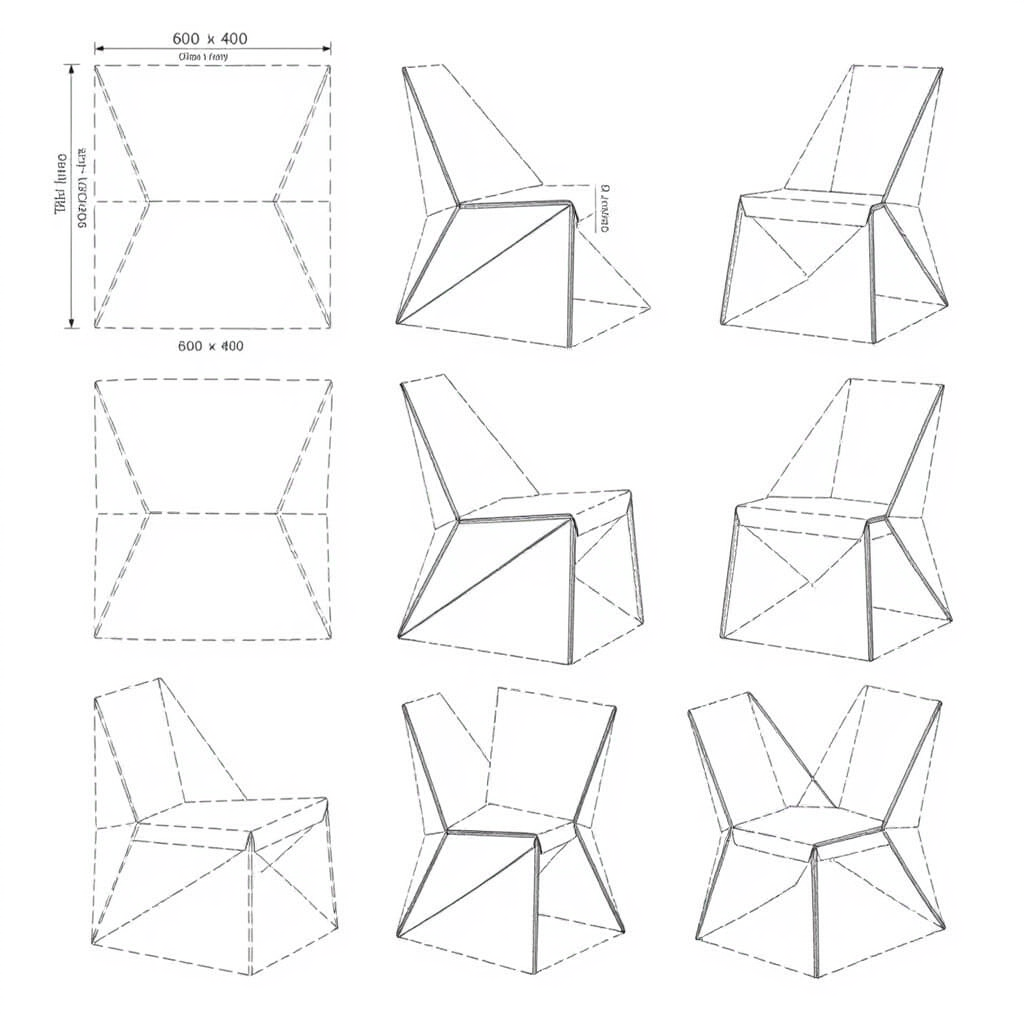

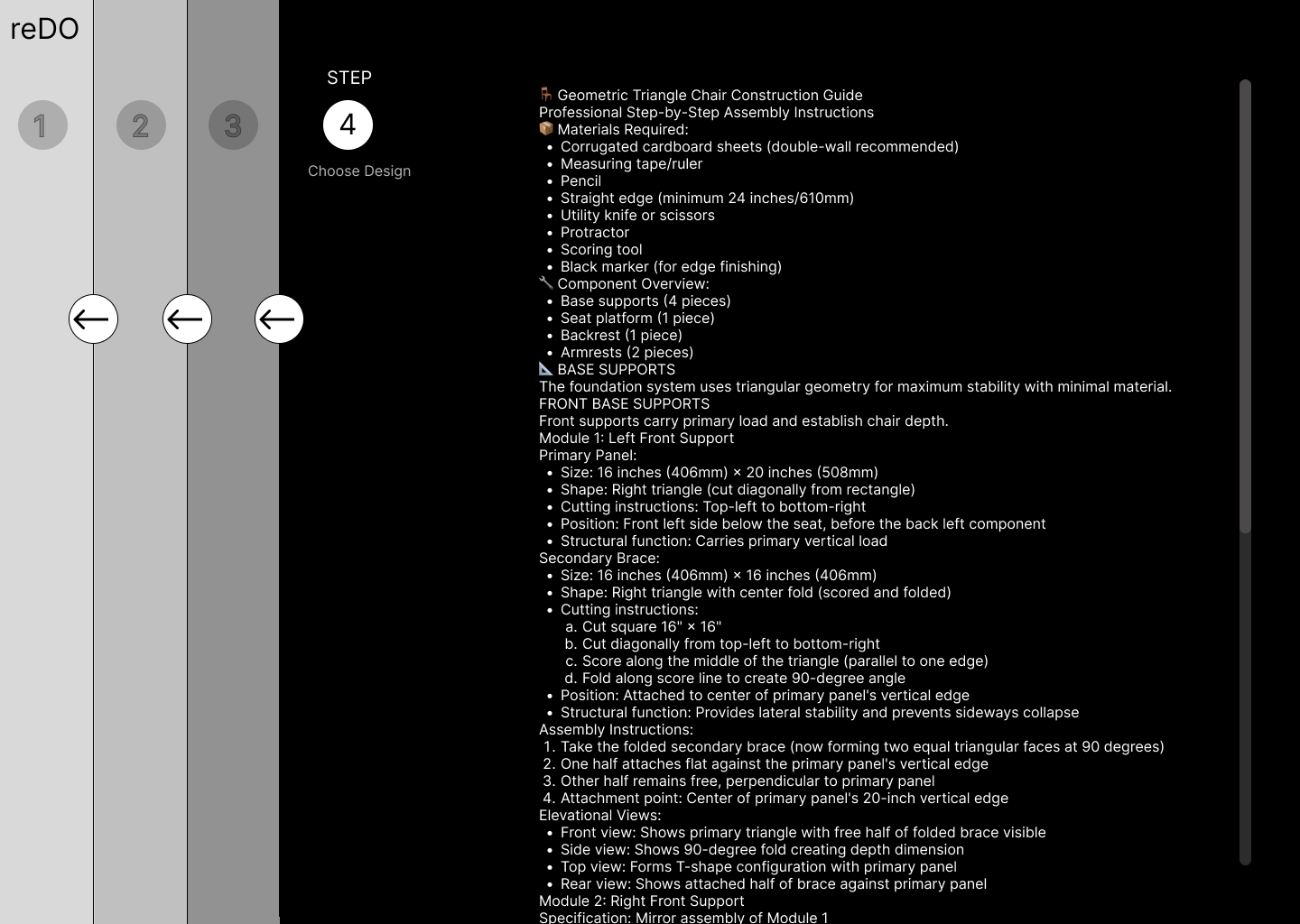

The following images are examples of step-by-step visual guides generated using LLMs and image generation with Flux Kontext. The goal is to keep refining this workflow for greater accuracy and reliability. Current results are satisfactory, though there is room for improvement.

The phone UI and website UI were designed in Figma.

Rapid visualization/rendering and concept iterations by leveraging AI to prototype at speed and scale.

Mentors-

Casey Rehm

Case Miller

Eli Joteva

Anthony Tran

Sophie Pennetier